Kubernetes Cluster Tutorial

If you are just beginning with Kubernetes, I feel the best way to learn it is to deploy your own cluster. This is the first part in a series of articles dealing with Kubernetes and related technologies. In this tutorial we will create a small two-node Kubernetes cluster with one master node and one worker node.

First create two virtual machines. These do not need to be very powerful. I will be using instances running Ubuntu 18.04 with a single CPU, 2 GB of RAM and 20 GB of disk. Once your instances are up, confirm that you can ping from one host to the other. It is also a good idea to perform a system update.

Note: all instructions will assume you are the root user. If you aren't, prepend sudo where necessary!

We need to add information about both hosts to each instance's /etc/hosts file. Add entries like the following, substituting your own IP addresses and hostnames.

192.168.199.6 kubernetes-demo-worker-1
192.168.199.7 kubernetes-demo-master-1
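If you end up re-running your setup steps, it helps to add these entries only when they are missing. The following is a minimal sketch and not part of the original steps: add_host_entry is a hypothetical helper name, and it takes the hosts file path as an argument so you can try it on a scratch file before pointing it at /etc/hosts.

```shell
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname is already present. $1 = hosts file, $2 = IP, $3 = hostname.
add_host_entry() {
  if ! grep -qE "(^|[[:space:]])$3([[:space:]]|\$)" "$1"; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}

# Example (run on both hosts, as root):
# add_host_entry /etc/hosts 192.168.199.6 kubernetes-demo-worker-1
# add_host_entry /etc/hosts 192.168.199.7 kubernetes-demo-master-1
```

Calling it twice with the same hostname leaves a single entry, so it is safe to keep in a provisioning script.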

Next we need to add the official Kubernetes repo to our sources list on both hosts. You can do that with the following commands:

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" > /etc/apt/sources.list.d/kubernetes.list
apt update

Kubernetes relies on underlying container technologies in order to do its thing. For this tutorial we will be using Docker. The following webpage gives instructions on how to install the container runtime correctly. Even though the page states that the instructions are for Ubuntu 16.04, they will work fine on Ubuntu 18.04. Scroll down and follow the instructions for Docker, and install Docker on all the nodes in your cluster.

https://kubernetes.io/docs/setup/cri/

After running the script contained on the webpage above, you can make sure that Docker is running with:

root@kubernetes-demo-master-1:~# systemctl show -p SubState --value docker
running
root@kubernetes-demo-master-1:~#

root@kubernetes-demo-worker-1:~# systemctl show -p SubState --value docker
running
root@kubernetes-demo-worker-1:~#

Now we are ready to install Kubernetes. This can be done simply with apt. Install these packages on each node of your cluster.

apt install kubelet kubeadm kubectl

While those install, I will offer a brief explanation of each package.

- kubelet - This is the main Kubernetes 'agent' that runs on each node in your cluster. It communicates with the underlying container runtime and tells it how to manage your containers.

- kubeadm - This is a cluster management tool provided by Kubernetes. We will use it to bootstrap the cluster.

- kubectl - You will use kubectl to interact with your cluster.

Finally, we are ready to bootstrap our cluster! We will use kubeadm to bootstrap the cluster on the master node. When that is complete we will join the worker node to the cluster. First let's look at the command.

kubeadm init --pod-network-cidr=10.60.0.0/16 --ignore-preflight-errors=NumCPU

We are giving two arguments to kubeadm init: pod-network-cidr and ignore-preflight-errors=NumCPU.

- pod-network-cidr - This specifies the IP range our pods will use to communicate internally with one another. It is important that you set this to something that will not overlap your physical network range. My nodes are on the 192.168.199.0/24 network, which would overlap with 192.168.0.0/16, the range Calico uses by default (more on that later), so I chose 10.60.0.0/16 instead. It is also important that this range does not overlap with the Kubernetes service-cidr, which by default is 10.96.0.0/12.

- ignore-preflight-errors=NumCPU - Since my instance has only 1 CPU allotted to it, kubeadm init will error out as the minimum number of CPUs allowed is 2. As this is a demo cluster for educational purposes we can instruct kubeadm to ignore this requirement. If your instance has 2 or more CPUs you will not need this flag.
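If you want to double-check a candidate pod range before running kubeadm init, the overlap reasoning above can be sketched in plain shell arithmetic. This is only an illustration under stated assumptions: ip_to_int, cidr_bounds and cidr_overlap are hypothetical helper names, and the sketch handles IPv4 only.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Print "first last" (as integers) for a CIDR block such as 10.60.0.0/16.
cidr_bounds() (
  ip=${1%/*}
  len=${1#*/}
  mask=$(( (4294967295 << (32 - $len)) & 4294967295 ))
  first=$(( $(ip_to_int "$ip") & $mask ))
  last=$(( $first | (4294967295 ^ $mask) ))
  echo "$first $last"
)

# Succeed (exit 0) if the two CIDR blocks share at least one address.
cidr_overlap() (
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

cidr_overlap 192.168.0.0/16 192.168.199.0/24 && echo "192.168.0.0/16 overlaps the node network"
cidr_overlap 10.60.0.0/16 10.96.0.0/12 || echo "10.60.0.0/16 is clear of the default service CIDR"
```

Two ranges overlap exactly when each one starts before the other ends, which is all cidr_overlap tests. Substitute your own node network and pod range for the example values.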

After running the kubeadm init command you should see a message with important information.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.199.7:6443 --token lr1pus.1t9ks2hl30jz91wm \
    --discovery-token-ca-cert-hash sha256:1f4e1ce5f92488d8fe01065bd6e11212e30302e294d0a87052d8163f86f1e70b
root@kubernetes-demo-master-1:~#

There are three important parts to this message.

- First we see instructions on how to create a config file for kubectl to use. For kubectl to interact with the apiserver it will need to use the proper certificate and know what address the apiserver is using. By default kubectl will look for this information in $HOME/.kube/config. You should copy and paste these three lines:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Next, kubeadm advises us that we should deploy a pod network to our cluster. This is required for pod-to-pod communication to work correctly when using our pod network IP space. We will use Calico for this. Calico is an advanced network plugin for Kubernetes and probably overkill for this lab. However, you will likely run into Calico in production clusters, so we may as well learn about it now!

- You will also see instructions on how to join your worker nodes to the cluster. This will become important later, after we have deployed our pod network. You should copy this command down so we can use it later.

Go ahead and follow the instructions to create the $HOME/.kube/config file. Then let's use kubectl to take a look at the state of our cluster so far.

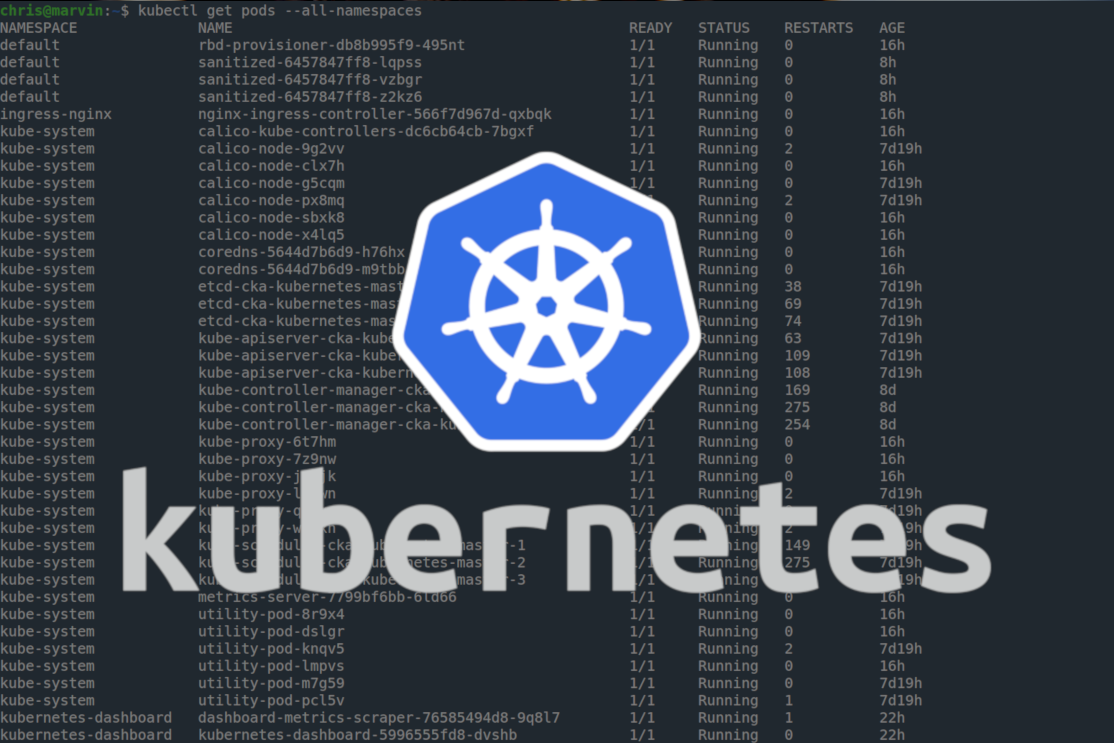

root@kubernetes-demo-master-1:~# mkdir -p $HOME/.kube
root@kubernetes-demo-master-1:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
root@kubernetes-demo-master-1:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config
root@kubernetes-demo-master-1:~#
root@kubernetes-demo-master-1:~# kubectl get pods -A
NAMESPACE     NAME                                               READY   STATUS    RESTARTS   AGE
kube-system   coredns-6955765f44-ngnnq                           0/1     Pending   0          13m
kube-system   coredns-6955765f44-rrngz                           0/1     Pending   0          13m
kube-system   etcd-kubernetes-demo-master-1                      1/1     Running   0          14m
kube-system   kube-apiserver-kubernetes-demo-master-1            1/1     Running   0          14m
kube-system   kube-controller-manager-kubernetes-demo-master-1   1/1     Running   0          14m
kube-system   kube-proxy-7fmbh                                   1/1     Running   0          13m
kube-system   kube-scheduler-kubernetes-demo-master-1            1/1     Running   0          14m
root@kubernetes-demo-master-1:~#

We used kubectl get pods -A to list all pods in our cluster. We can see that most pods show 1/1 under READY and a status of Running. Our coredns pods have a status of Pending and a ready state of 0/1. These two pods cannot come into service until after we deploy our pod networking. Before we do that, let's take a look at some of these pods to better understand what they do.

First, a quick note about namespaces. Namespaces allow you to isolate pods and users of your clusters. In Kubernetes your control plane will be located in the kube-system namespace. We used the -A switch in our kubectl command to let kubectl know we wanted to see pods in all namespaces. If you use the get pods command without specifying a namespace you will be looking inside the default namespace. There is nothing there yet!

- apiserver - This is the heart of your cluster. All your control plane elements will be communicating with the apiserver. When you issue kubectl commands kubectl is querying the apiserver.

- etcd - This is the main data store of Kubernetes. Information about what pods should be running where is stored here. The apiserver updates etcd and queries it to get information about your cluster.

- controller-manager - The controller manager watches the state of the cluster through the apiserver and tries to ensure that the intended state of the cluster matches the current state of the cluster.

- scheduler - When new pods are created the cluster must figure out where to put them. The scheduler looks for a node that has resources available for the pod and matches any labels specified by the pod.

- kube-proxy - Kube-proxy is responsible for facilitating communication into and out of pods. When services are created to expose ports in the cluster or on the node itself kube-proxy is at work. Kube-proxy can also do simple load balancing between pods.

- coredns - CoreDNS does what it sounds like it does. It provides DNS services to your pods. This is more important than it sounds at first. One of the main reasons that Kubernetes is so exciting is its ability to 'self-heal' or repair itself. If a pod unexpectedly crashes or one of your nodes goes down, Kubernetes will recreate the pod on the same node or on other nodes. These newly created pods probably have different IP addresses. CoreDNS will keep track of which names map to which IP addresses and resolve them for you.
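To make the CoreDNS role a little more concrete: workloads are usually reached through Services, and the cluster DNS resolves names of the form service.namespace.svc.cluster-domain. The sketch below only builds such a name. service_fqdn is a hypothetical helper, and cluster.local is assumed as the cluster domain (the kubeadm default, which a cluster may override).

```shell
# Hypothetical helper: build the DNS name the cluster DNS serves for a Service.
# $1 = service name, $2 = namespace (default: "default"),
# $3 = cluster domain (assumed to be the kubeadm default, cluster.local).
service_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

# CoreDNS itself is exposed through the kube-dns Service in kube-system.
service_fqdn kube-dns kube-system
```

Because clients look up the name rather than a fixed address, a recreated pod with a new IP stays reachable without any client-side changes.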

This is a very high-level overview of these components and I left out a lot of details. For now, let's move on to deploying Calico, our cluster network.

Calico is a layer 3 networking plugin that we can use with Kubernetes. It employs BGP to update and distribute your nodes' routing tables. You can get a brief overview of it here:

https://docs.projectcalico.org/introduction/

At the time of writing, Calico 3.11 is the most recent release. On your master node, let's get the deployment YAML using the following command.

root@kubernetes-demo-master-1:~# wget https://docs.projectcalico.org/v3.11/manifests/calico.yaml

This YAML file can be used to instruct your cluster to deploy Calico. Before feeding it to Kubernetes we have to make a change. Remember when we ran kubeadm init and specified a pod-network-cidr? Well, we need to make sure Calico is deployed using the same IP space. By default Calico will try to use 192.168.0.0/16. I used 10.60.0.0/16 for my cluster, so I will use sed to quickly correct the YAML.

root@kubernetes-demo-master-1:~# sed -i 's/192.168.0.0\/16/10.60.0.0\/16/g' calico.yaml
root@kubernetes-demo-master-1:~#
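The sed one-liner above works fine here. Note that its unescaped dots match any character, which is harmless for this file but worth knowing. As a slightly stricter sketch of the same substitution, the hypothetical helper below escapes the dots and uses | as the delimiter so the slash in the CIDR needs no backslash. The name set_pod_cidr and the temporary-file approach are my own choices for illustration.

```shell
# Hypothetical helper: replace one CIDR with another throughout a manifest.
# $1 = manifest path, $2 = CIDR to replace, $3 = new CIDR.
set_pod_cidr() {
  # Escape the dots so they match literally rather than "any character".
  old_pattern=$(printf '%s' "$2" | sed 's/\./\\./g')
  # Use | as the sed delimiter so the / in the CIDR needs no escaping.
  sed "s|$old_pattern|$3|g" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Example:
# set_pod_cidr calico.yaml 192.168.0.0/16 10.60.0.0/16
# grep -n '10.60.0.0/16' calico.yaml   # confirm the change took effect
```

Whichever form you use, it is worth grepping the file afterwards to confirm the new range is present before applying it.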

Now simply tell Kubernetes to apply this YAML with kubectl apply -f calico.yaml.

root@kubernetes-demo-master-1:~# kubectl apply -f calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
root@kubernetes-demo-master-1:~#

Now we need to wait a bit for Calico to finish deploying and our coredns pods to come into service. You can check on how things are going with kubectl get pods -A.

root@kubernetes-demo-master-1:~# kubectl get pods -A
NAMESPACE     NAME                                               READY   STATUS              RESTARTS   AGE
kube-system   calico-kube-controllers-5b644bc49c-5pbnx           0/1     ContainerCreating   0          33s
kube-system   calico-node-4shdh                                  0/1     PodInitializing     0          34s
kube-system   coredns-6955765f44-ngnnq                           0/1     ContainerCreating   0          60m
kube-system   coredns-6955765f44-rrngz                           0/1     ContainerCreating   0          60m
kube-system   etcd-kubernetes-demo-master-1                      1/1     Running             0          60m
kube-system   kube-apiserver-kubernetes-demo-master-1            1/1     Running             0          60m
kube-system   kube-controller-manager-kubernetes-demo-master-1   1/1     Running             0          60m
kube-system   kube-proxy-7fmbh                                   1/1     Running             0          60m
kube-system   kube-scheduler-kubernetes-demo-master-1            1/1     Running             0          60m
root@kubernetes-demo-master-1:~# kubectl get pods -A
NAMESPACE     NAME                                               READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-5b644bc49c-5pbnx           1/1     Running   0          100s
kube-system   calico-node-4shdh                                  1/1     Running   0          101s
kube-system   coredns-6955765f44-ngnnq                           1/1     Running   0          61m
kube-system   coredns-6955765f44-rrngz                           1/1     Running   0          61m
kube-system   etcd-kubernetes-demo-master-1                      1/1     Running   0          61m
kube-system   kube-apiserver-kubernetes-demo-master-1            1/1     Running   0          61m
kube-system   kube-controller-manager-kubernetes-demo-master-1   1/1     Running   0          61m
kube-system   kube-proxy-7fmbh                                   1/1     Running   0          61m
kube-system   kube-scheduler-kubernetes-demo-master-1            1/1     Running   0          61m
root@kubernetes-demo-master-1:~#
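Rather than eyeballing the whole table each time, you can filter it down to the pods that are still starting. This is a small sketch, assuming the column layout shown above (STATUS as the fourth column); pods_not_running is a hypothetical helper name.

```shell
# Hypothetical helper: read "kubectl get pods -A" output on stdin and print
# namespace/name for every pod whose STATUS column is not "Running".
pods_not_running() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 }'
}

# Example, on the master node:
# kubectl get pods -A | pods_not_running
```

An empty result means everything has reached Running. kubectl also has a built-in wait subcommand, for example kubectl wait --for=condition=Ready pods --all -n kube-system --timeout=300s, which blocks until the pods are ready or the timeout expires.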

Alright! Our control plane is up and working now! Finally we can join our worker node. Did you copy the join command that kubeadm printed when it completed its bootstrap? If so, log into your worker node and run it there. If you forgot to copy it down, don't worry: you can always generate a new join command, with a fresh token, by running kubeadm token create --print-join-command on your master node.

root@kubernetes-demo-master-1:~# kubeadm token create --print-join-command
kubeadm join 192.168.199.7:6443 --token f65ste.hrpatwe6krx716gk --discovery-token-ca-cert-hash sha256:1f4e1ce5f92488d8fe01065bd6e11212e30302e294d0a87052d8163f86f1e70b
root@kubernetes-demo-master-1:~#
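A note on the tokens themselves: kubeadm bootstrap tokens have the shape of six characters, a dot, then sixteen characters, all lowercase letters or digits, and by default they expire after 24 hours, which is why generating a fresh one comes in handy. If you are copying a join command between terminals, a quick shape check can catch a truncated paste. The helper name is_bootstrap_token is hypothetical and the sketch checks format only, not whether the token is still valid.

```shell
# Hypothetical helper: succeed if $1 looks like a kubeadm bootstrap token,
# i.e. 6 characters, a dot, then 16 characters, all lowercase letters or digits.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

is_bootstrap_token f65ste.hrpatwe6krx716gk && echo "token format looks fine"
```

To see which tokens currently exist and when they expire, run kubeadm token list on the master node.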

After running the join command on your worker node you should see something like the following:

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

root@kubernetes-demo-worker-1:~#

And when you run kubectl get nodes you should see both your master node and your worker node in a Ready state. Sometimes this takes a few minutes, so be patient.

root@kubernetes-demo-master-1:~# kubectl get nodes
NAME                       STATUS   ROLES    AGE   VERSION
kubernetes-demo-worker-1   Ready    <none>   85s   v1.17.2
kubernetes-demo-master-1   Ready    master   68m   v1.17.2
root@kubernetes-demo-master-1:~#

We can also see that the cluster has already spun up some pods on this new node.

root@kubernetes-demo-master-1:~# kubectl get pods -A -o wide
NAMESPACE     NAME                                               READY   STATUS    RESTARTS   AGE     IP              NODE                       NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-5b644bc49c-5pbnx           1/1     Running   0          19m     10.60.241.1     kubernetes-demo-master-1   <none>           <none>
kube-system   calico-node-4shdh                                  1/1     Running   0          19m     192.168.199.7   kubernetes-demo-master-1   <none>           <none>
kube-system   calico-node-vtwv6                                  1/1     Running   0          2m11s   192.168.199.6   kubernetes-demo-worker-1   <none>           <none>
kube-system   coredns-6955765f44-ngnnq                           1/1     Running   0          78m     10.60.241.2     kubernetes-demo-master-1   <none>           <none>
kube-system   coredns-6955765f44-rrngz                           1/1     Running   0          78m     10.60.241.3     kubernetes-demo-master-1   <none>           <none>
kube-system   etcd-kubernetes-demo-master-1                      1/1     Running   0          79m     192.168.199.7   kubernetes-demo-master-1   <none>           <none>
kube-system   kube-apiserver-kubernetes-demo-master-1            1/1     Running   0          79m     192.168.199.7   kubernetes-demo-master-1   <none>           <none>
kube-system   kube-controller-manager-kubernetes-demo-master-1   1/1     Running   0          79m     192.168.199.7   kubernetes-demo-master-1   <none>           <none>
kube-system   kube-proxy-7fmbh                                   1/1     Running   0          78m     192.168.199.7   kubernetes-demo-master-1   <none>           <none>
kube-system   kube-proxy-gp9fs                                   1/1     Running   0          2m11s   192.168.199.6   kubernetes-demo-worker-1   <none>           <none>
kube-system   kube-scheduler-kubernetes-demo-master-1            1/1     Running   0          79m     192.168.199.7   kubernetes-demo-master-1   <none>           <none>
root@kubernetes-demo-master-1:~#

We used the -o wide switch to show a little more information, like which node each pod is running on. We can see that Kubernetes automatically created a calico-node pod and a kube-proxy pod on kubernetes-demo-worker-1.
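Both calico-node and kube-proxy are deployed as DaemonSets, which is why one copy of each shows up on every node that joins. To summarize where pods have landed without reading the whole table, here is a small sketch that counts pods per node. pods_per_node is a hypothetical helper name, and it assumes the column layout shown above, where NODE is the eighth column of kubectl get pods -A -o wide.

```shell
# Hypothetical helper: read "kubectl get pods -A -o wide" output on stdin and
# print "node count" pairs. With -A, NODE is assumed to be the 8th column.
pods_per_node() {
  awk 'NR > 1 { count[$8]++ } END { for (node in count) print node, count[node] }' | sort
}

# Example, on the master node:
# kubectl get pods -A -o wide | pods_per_node
```

You can also list the DaemonSets directly with kubectl get daemonsets -n kube-system.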

You've now successfully deployed Kubernetes! If you want to add another worker you can simply spin up another virtual machine and install Docker, kubeadm, kubelet, and kubectl as in the instructions above. Then you can use the same join command to add it to your cluster, or generate a new one with kubeadm token create --print-join-command if the token has expired.

In the future I will explore Calico and other Kubernetes subjects. I hope you have found this useful, and good luck learning Kubernetes!